In the complex and ever-evolving world of search engine optimization (SEO), technical SEO remains essential for visibility because it forms the foundation for every other SEO strategy. This post walks through the key aspects of technical SEO, from crawlability to indexation, and explains how these elements work together to improve your website’s performance in search engine results pages (SERPs).

What is Technical SEO?

Technical SEO refers to optimizing a website so that search engines can easily find, crawl, understand, and index its pages. It also covers site speed and mobile usability, which make the site easier for people to use.

Unlike on-page SEO, which focuses on content and keywords, or off-page SEO, which involves backlinks and social signals, technical SEO ensures that your site meets the technical requirements of search engines to boost organic rankings.

What is Crawlability?

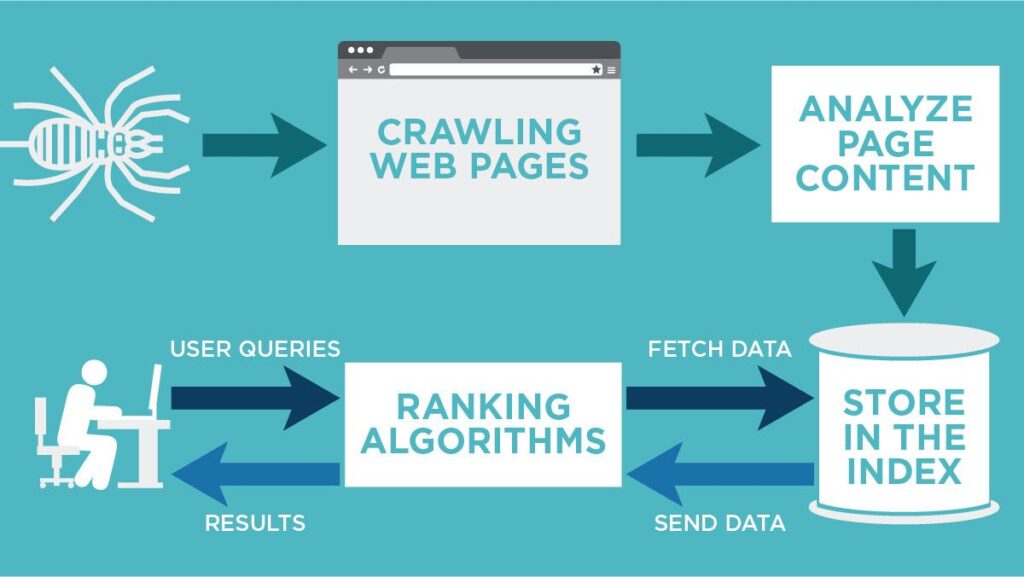

Crawlability describes how easily a search engine can access and navigate the content of a website. Search engine bots, also called crawlers or spiders, are automated programs that roam the internet and collect information from web pages for search engine databases. For a website to be crawled effectively, its structure must let these bots discover and reach its pages easily.

Key Elements Affecting Crawlability

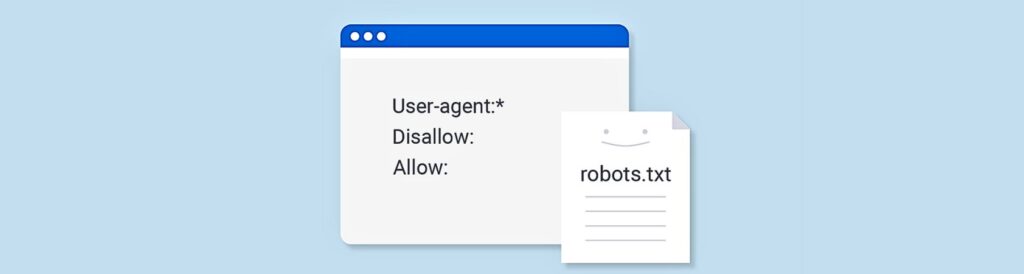

Robots.txt File:

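This file tells search engine bots which paths they may or may not crawl. A properly structured robots.txt file keeps crawlers away from unimportant sections of your site, such as admin pages or duplicate content. Note that robots.txt controls crawling only: the file is publicly readable, so it does not protect sensitive content, and a blocked URL can still end up indexed if other pages link to it.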
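To make the allow and disallow rules concrete, here is a minimal sketch that checks URLs against a robots.txt file using Python's standard `urllib.robotparser` module. The rules, the `example.com` URLs, and the helper name `can_crawl` are illustrative assumptions, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://www.example.com/blog/technical-seo/",
        "https://www.example.com/admin/login",
    ):
        print(url, can_crawl(ROBOTS_TXT, "Googlebot", url))
```

Python's parser applies the first matching rule in file order, which may differ from how a particular search engine resolves conflicting rules, so treat the result as a quick sanity check rather than a guarantee.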

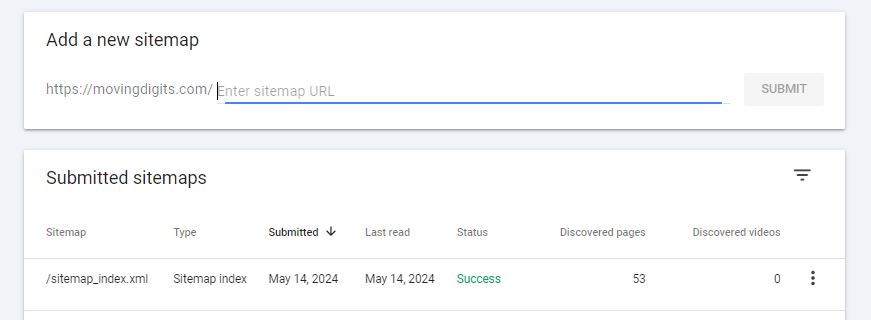

Sitemaps:

An XML sitemap is a file that lists all the important pages on your website, helping search engines discover and crawl them efficiently. Regularly updating your sitemap and submitting it to search engines can significantly improve your site’s crawlability.
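As a rough illustration of what such a file contains, the sketch below builds a small sitemap with Python's standard library. The page URLs, dates, and the function name `build_sitemap` are placeholders; the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2024-01-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages, for illustration only.
    print(build_sitemap([
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/blog/technical-seo/", "2024-02-01"),
    ]))
```

In practice most content management systems and SEO plugins generate this file automatically; the sketch is only meant to show the structure search engines expect.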

Internal Linking:

A strong internal linking structure helps search engines discover new pages and navigate to every page on your site. It also distributes page authority across your site, which can improve the ranking of individual pages.
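One way to audit internal linking is to list the links on a page that stay on the same host. The sketch below does this with Python's built-in HTML parser; the class and function names are made up for this example, and it assumes you already have the page's HTML as a string.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def internal_links(html: str, page_url: str) -> set[str]:
    """Return absolute URLs of links that stay on the same host as page_url."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    absolute = (urljoin(page_url, href) for href in collector.hrefs)
    return {url for url in absolute if urlparse(url).netloc == host}
```

Running this over every page and looking for pages that no other page links to is a simple way to spot orphaned content.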

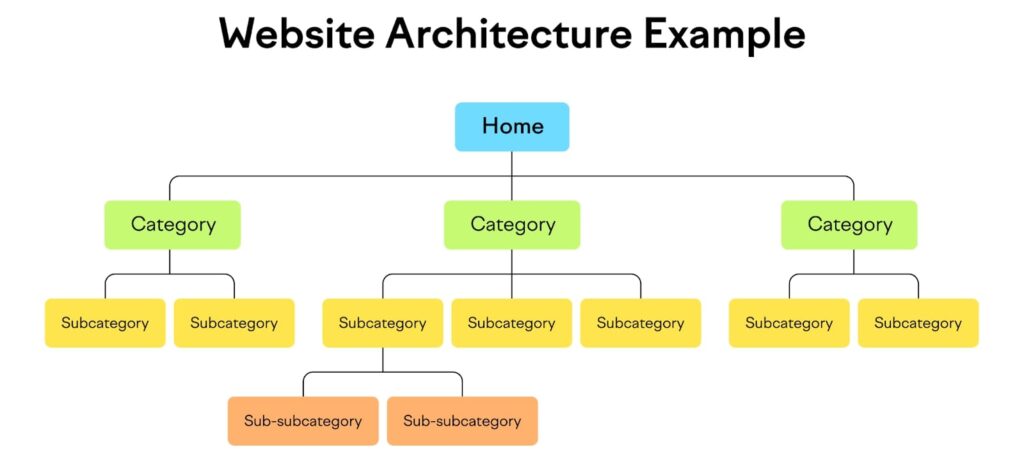

Site Architecture:

A well-organized site architecture with clear navigation paths facilitates efficient crawling. Using a hierarchical structure, such as categories and subcategories, helps search engine bots understand the relationship between different pages.

Server Errors and Redirects:

Ensure that your website is free of server errors (such as 5xx errors) and broken links (404 errors). Properly implemented redirects (301 redirects) are also important to guide search engines and users to the correct pages.
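Redirects that point to other redirects waste crawl effort, and loops make a page unreachable. The sketch below walks a redirect map held in memory and reports the full chain; the function name, the `max_hops` limit of 5, and the sample paths are assumptions chosen for illustration, not thresholds published by any search engine.

```python
def resolve_redirects(
    start: str, redirects: dict[str, str], max_hops: int = 5
) -> list[str]:
    """Follow a redirect map from start and return every URL visited, in order.

    Raises ValueError on a redirect loop or when the chain exceeds max_hops.
    """
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError(f"redirect loop: {' -> '.join(chain + [target])}")
        chain.append(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} redirects from {start}")
    return chain


if __name__ == "__main__":
    # Hypothetical redirect rules: old URL -> new URL.
    rules = {"/old-course": "/courses/seo", "/courses/seo": "/courses/technical-seo"}
    print(resolve_redirects("/old-course", rules))
```

Any chain with more than one hop is a candidate for flattening into a single 301 from the original URL to the final destination.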

What is Indexation?

Indexation is the process by which search engines analyze the content of web pages and store it in their databases. The index works like a massive library of billions of web pages, from which the search engine retrieves relevant results for user queries. After crawling a website, search engine bots decide which pages to include in the index based on factors such as relevance, quality, and accessibility. A page can appear in the SERPs only once it has been indexed.

Key Elements Affecting Indexation

Meta Robot Tags:

Meta robots tags give search engines specific instructions on how to handle individual pages. Adding a “noindex” directive to pages such as admin panels or duplicate content can keep them out of the index. The page must remain crawlable for this to work, because a bot that is blocked from the page never sees the tag. Example: <meta name="robots" content="noindex">
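When auditing a site, it helps to check which pages actually carry this directive. The following is a minimal sketch using Python's built-in HTML parser; the names `RobotsMetaParser` and `is_noindex` are invented for this example, and it only inspects the generic `robots` meta tag, not bot-specific tags or HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Gather the directives from every <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def is_noindex(html: str) -> bool:
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```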

Canonical Tags:

Canonical tags help prevent duplicate content issues by specifying the preferred version of a webpage. This is crucial for maintaining a clean index and ensuring that link equity is not split between duplicate pages. Example: <link rel="canonical" href="https://movingdigits.com/advanced-digital-marketing-course/" />
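A quick way to verify canonical tags during an audit is to read the declared URL from each page and compare it with the URL you expect. The sketch below extracts the first canonical link with Python's built-in HTML parser; `CanonicalParser` and `canonical_url` are hypothetical names used only here.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalParser(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attr.get("href")


def canonical_url(html: str) -> Optional[str]:
    """Return the canonical URL declared in the HTML, or None if absent."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical
```

A page whose canonical URL differs from its own address is telling search engines to index the other version, so unexpected mismatches are worth reviewing.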

Pagination Tags:

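rel="next" and rel="prev": For paginated content, these link attributes describe the relationship between pages in a series. Google announced in 2019 that it no longer uses them as an indexing signal, so treat them as optional hints that other search engines may still read, and make sure every page in the series is reachable through ordinary crawlable links.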

Structured Data:

Implementing structured data (schema markup) can help search engines understand the context of your content, potentially improving how it is indexed and displayed in SERPs.
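Structured data is commonly added as a JSON-LD script block in the page's HTML. The sketch below generates one for an article using the schema.org `Article` type; the function name and the sample values are assumptions, and a real page would usually include more properties.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str) -> str:
    """Return a JSON-LD <script> block describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. 2024-01-15
    }
    # Escape "<" so a value containing "</script>" cannot end the block early.
    payload = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    # Hypothetical article details, for illustration only.
    print(article_jsonld("Technical SEO Basics", "Jane Doe", "2024-01-15"))
```

After adding markup like this, it is worth validating it with a structured data testing tool before relying on it.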

Quality Content:

Search engines prioritize high-quality, relevant content that provides value to users. Content that is unique, informative, and well-optimized for targeted keywords is more likely to be indexed and ranked prominently in search results.

Tools and Best Practices for Technical SEO

Tools for Monitoring Crawlability and Indexation

Google Search Console: This is an essential tool for monitoring your site’s crawlability and indexation. It provides insights into how Google is indexing your site and alerts you to any issues.

Screaming Frog SEO Spider: This tool helps you perform a detailed crawl of your site, identify broken links, analyze page titles and metadata, and generate XML sitemaps.

Ahrefs Site Audit: Ahrefs offers comprehensive site audits that help you identify technical issues affecting crawlability and indexation.

Best Practices for Technical SEO

Regular Audits: Conducting regular site audits to identify and fix crawl errors, broken links, and other technical issues is essential for maintaining optimal crawlability and indexation.

Optimize Site Speed: Page speed is a ranking factor and directly affects user experience. Optimize images, leverage browser caching, and minimize JavaScript to improve your site’s speed.

Mobile-Friendly Design: With Google’s mobile-first indexing, the mobile version of your website is considered the primary version. A mobile-friendly site ensures that search engines can effectively index your content for mobile users, improving your chances of ranking well.

HTTPS Protocol: Ensure your site uses HTTPS to provide a secure browsing experience. Google has treated HTTPS as a ranking signal since 2014.

Clean URL Structure: Use a clear and logical URL structure that is easy for both users and search engines to understand. Avoid using complex query strings and ensure URLs are descriptive of the page content.
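Descriptive URLs are often derived from the page title. The sketch below shows one common way to turn a title into a lowercase, hyphen-separated slug; the function name `slugify` and the exact rules (ASCII only, hyphens as separators) are choices made for this example rather than requirements of any search engine.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Convert a page title into a lowercase, hyphen-separated URL slug."""
    # Split accented characters into base letter + accent, then drop non-ASCII.
    ascii_text = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    # Replace each run of other characters with one hyphen and trim the ends.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


if __name__ == "__main__":
    print(slugify("What is Technical SEO?"))
```

A slug like this is easier to read and share than a URL built from query parameters such as `?id=123&cat=7`.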

At Moving Digits, a top Digital Marketing Training Institute in Dadar, we offer Advanced Digital Marketing Courses with Job Placement that cover the intricacies of technical SEO, On-page and Off-page SEO, Social Media Marketing (SMM), Search Engine Marketing (SEM), and more.

Conclusion:

From crawlability to indexation, technical SEO plays a vital role in ensuring that your website is accessible and understandable to search engines. By optimizing the various factors that influence these processes, you can enhance your site’s visibility in search engine results, leading to more traffic and better performance. Regular audits, proper configuration, and adherence to best practices are essential to maintaining a technically sound website that search engines can efficiently crawl and index. Remember, these practices not only improve search visibility but also enhance user experience, leading to better engagement and higher conversion rates. Mastering these aspects of technical SEO is fundamental to achieving long-term success in the competitive digital landscape.